Abstract

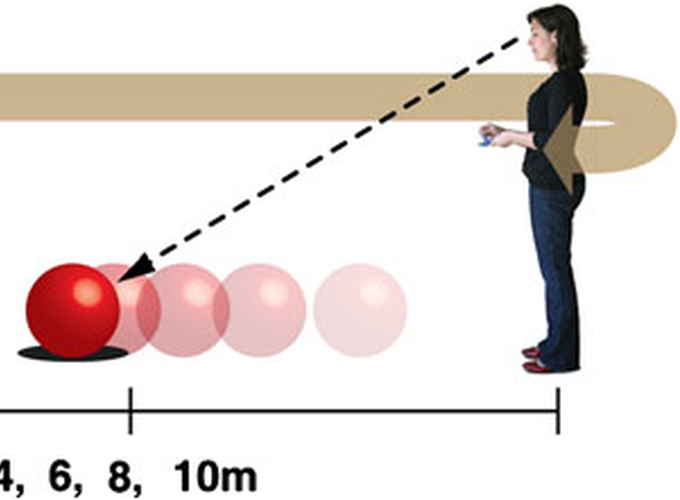

When walking through space, dynamic visual information (optic flow) and body-based information (proprioceptive and vestibular) jointly specify the magnitude of distance travelled. While recent evidence has demonstrated the extent to which each of these cues can be used independently, less is known about how they are integrated when simultaneously present. Many studies have shown that sensory information is integrated using a weighted linear sum, yet little is known about whether this holds true for the integration of visual and body-based cues for travelled distance perception. In this study, which used virtual reality technology, participants first travelled a predefined distance and subsequently matched this distance by adjusting an egocentric, in-depth target. The visual stimulus consisted of a long hallway and was presented in stereo via a head-mounted display. Body-based cues were provided either by walking in a fully tracked free-walking space (Exp. 1) or by being passively moved in a wheelchair (Exp. 2). Travelled distances were provided through optic flow alone, body-based cues alone, or both cues combined. In the combined condition, visually specified distances were either congruent (1.0×) or incongruent (0.7× or 1.4×) with distances specified by body-based cues. Responses reflect a consistent combined effect of both visual and body-based information, with an overall higher influence of body-based cues when walking and a higher influence of visual cues during passive movement. A comparison of the results of Experiments 1 and 2 indicates that both proprioceptive and vestibular cues contribute to travelled distance estimates during walking. The observed results were effectively described using a basic linear weighting model.
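As a minimal sketch of what a weighted linear sum means here, the combined estimate can be written in the following generic form. The symbols are assumptions introduced for illustration and are not taken from the study: $d_v$ and $d_b$ are the distances specified by visual and body-based cues, $w_v$ and $w_b$ are their relative weights, and $g$ is the visual gain relative to the body-based distance.

$$
\hat{d} = w_v\, d_v + w_b\, d_b, \qquad w_v + w_b = 1
$$

With $d_v = g\, d_b$ in a combined-cue trial, this reduces to

$$
\hat{d} = \left(w_v\, g + w_b\right) d_b .
$$

For purely hypothetical values $w_b = 0.6$, $w_v = 0.4$ and $g = 0.5$, the predicted estimate would be $\hat{d} = (0.4 \times 0.5 + 0.6)\, d_b = 0.8\, d_b$, which lies between the two single-cue distances and closer to the more heavily weighted cue. When $g = 1$ the cues agree and the estimate equals $d_b$ regardless of the weights.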