VISUAL-VESTIBULAR INTERACTIONS FOR SELF-MOTION ESTIMATION

Abstract

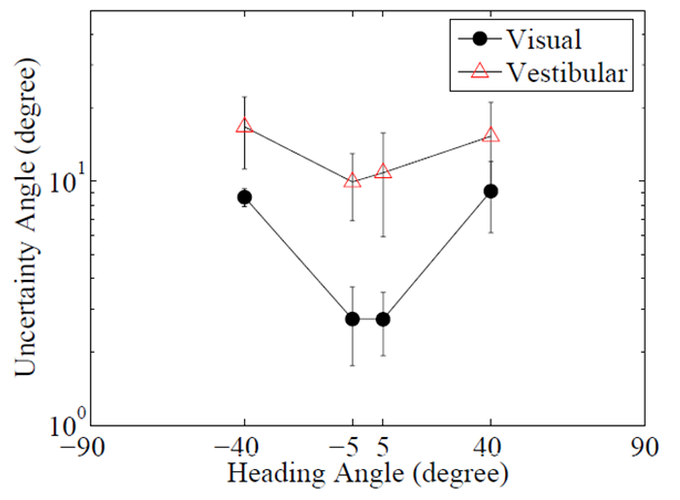

Accurate perception of self-motion through cluttered environments involves a coordinated set of sensorimotor processes that encode and compare information from visual, vestibular, proprioceptive, motor-corollary, and cognitive inputs. Our goal was to investigate visual and vestibular cues to the direction of linear self-motion (heading direction). In the vestibular experiment, blindfolded participants were moved on a Stewart platform through two forward linear translations with identical acceleration profiles. One translation followed a standard heading, while the heading of the other (test) translation was varied randomly across trials using the method of constant stimuli. Participants judged in which of the two intervals they had moved more to the right. In the visual-alone experiment, participants viewed two intervals of radial optic flow and judged which interval showed a flow pattern consistent with more rightward self-motion. From participants' responses, we computed a psychometric function for each experiment, from which we estimated each participant's uncertainty in heading-direction estimates.
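The step from two-interval responses to a heading uncertainty can be illustrated with a minimal sketch. It assumes a cumulative Gaussian psychometric function fitted by maximum likelihood with a coarse grid search, using only the Python standard library. The abstract does not specify the model or the fitting procedure, so both are assumptions. The heading offsets, trial counts, and "true" observer parameters below are synthetic values invented for illustration, not data from this study, and the names (`cum_gauss`, `neg_log_likelihood`, `fit_psychometric`) are hypothetical. In this model the fitted mean μ is the point of subjective equality (the test offset judged more rightward than the standard on half of the trials), and the fitted σ is one common measure of heading uncertainty.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical synthetic data (NOT from the study): test-heading offsets relative to
# the standard heading in degrees (positive = more rightward) and trials per offset.
HEADINGS: List[float] = [-8.0, -4.0, -2.0, 0.0, 2.0, 4.0, 8.0]
N_TOTAL: List[int] = [100] * len(HEADINGS)


def cum_gauss(x: float, mu: float, sigma: float) -> float:
    """Cumulative Gaussian: P(respond 'test interval more rightward') at offset x."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


# Simulated response counts from an assumed observer with bias 0.5 deg and sigma 3 deg.
N_RIGHT: List[int] = [round(n * cum_gauss(x, 0.5, 3.0)) for x, n in zip(HEADINGS, N_TOTAL)]


def neg_log_likelihood(headings: Sequence[float], n_right: Sequence[int],
                       n_total: Sequence[int], mu: float, sigma: float) -> float:
    """Binomial negative log-likelihood of the counts (constant terms dropped)."""
    nll = 0.0
    for x, k, n in zip(headings, n_right, n_total):
        p = cum_gauss(x, mu, sigma)
        p = min(max(p, 1e-9), 1.0 - 1e-9)  # clamp to avoid log(0)
        nll -= k * math.log(p) + (n - k) * math.log(1.0 - p)
    return nll


def fit_psychometric(headings: Sequence[float], n_right: Sequence[int],
                     n_total: Sequence[int], mu_grid: Sequence[float],
                     sigma_grid: Sequence[float]) -> Tuple[float, float]:
    """Return the (mu, sigma) grid point with the lowest negative log-likelihood."""
    best_nll, best_mu, best_sigma = math.inf, mu_grid[0], sigma_grid[0]
    for mu in mu_grid:
        for sigma in sigma_grid:
            nll = neg_log_likelihood(headings, n_right, n_total, mu, sigma)
            if nll < best_nll:
                best_nll, best_mu, best_sigma = nll, mu, sigma
    return best_mu, best_sigma


# Assumed search ranges: mu from -3 to 3 deg, sigma from 0.5 to 8 deg, in 0.1-deg steps.
MU_GRID: List[float] = [round(-3.0 + 0.1 * i, 1) for i in range(61)]
SIGMA_GRID: List[float] = [round(0.5 + 0.1 * i, 1) for i in range(76)]

if __name__ == "__main__":
    mu_hat, sigma_hat = fit_psychometric(HEADINGS, N_RIGHT, N_TOTAL, MU_GRID, SIGMA_GRID)
    print(f"bias (PSE) = {mu_hat:.1f} deg, uncertainty (sigma) = {sigma_hat:.1f} deg")
```

Run on these synthetic counts, the fit should recover values close to the assumed μ = 0.5° and σ = 3°. The grid search only keeps the sketch dependency-free; a general-purpose optimizer or a finer grid would give more precise estimates. If both intervals are assumed to contribute independent noise of equal variance, the single-interval uncertainty is often taken as σ/√2.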